Something to look forward to: Pricing for Nvidia’s Blackwell platform has emerged in dribs and drabs, from analyst estimates to CEO Jensen Huang’s comments. Simply put, it will cost buyers dearly to deploy these performance-packed products. Morgan Stanley estimates that Nvidia will ship 60,000 to 70,000 B200 server cabinets in 2025, translating to at least $210 billion in annual revenue. Despite the high costs, the demand for these powerful AI servers remains intense.

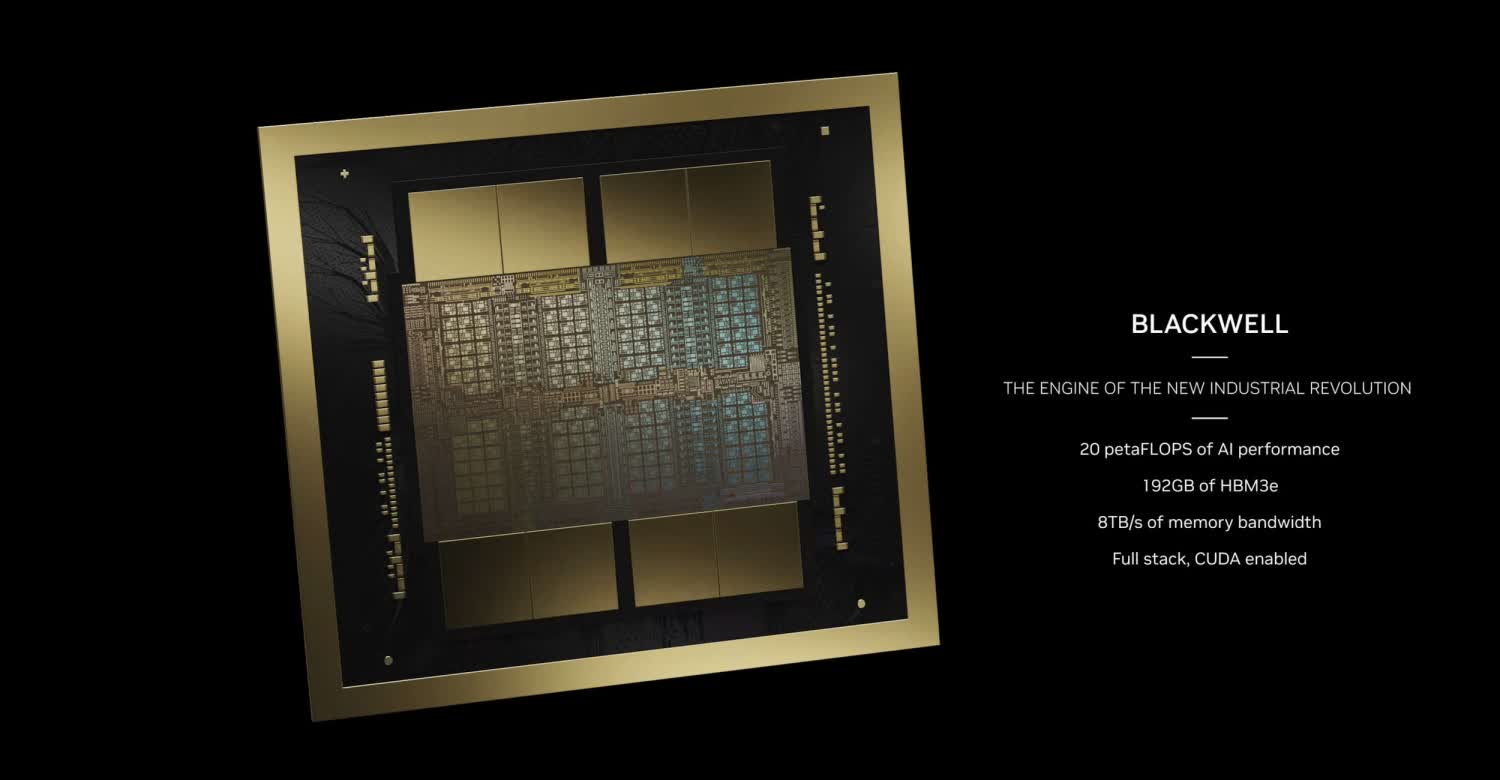

Nvidia has reportedly invested some $10 billion developing the Blackwell platform – an effort involving around 25,000 people. With all of the performance packed into a single Blackwell GPU, it is no surprise these products command significant premiums.

According to HSBC analysts, Nvidia’s GB200 NVL36 server rack system will cost $1.8 million, and the NVL72 will be $3 million. The more powerful GB200 Superchip, which combines CPUs and GPUs, is expected to cost $60,000 to $70,000 each. These Superchips include two Blackwell GPUs and a single Grace CPU, accompanied by a large pool of HBM3E memory.

Earlier this year, CEO Jensen Huang told CNBC that a Blackwell GPU would cost $30,000 to $40,000, and based on this information Morgan Stanley has calculated the total cost to buyers. With each AI server cabinet priced at roughly $2 million to $3 million, and Nvidia planning to ship between 60,000 and 70,000 B200 server cabinets, the estimated annual revenue is at least $210 billion.
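The arithmetic behind that revenue estimate can be sketched by multiplying the quoted shipment range by the quoted price range. This is a naive product using only the figures above, not Morgan Stanley's actual model, and the function name `revenue_range` is a hypothetical one chosen for illustration.

```python
def revenue_range(units_low, units_high, price_low, price_high):
    """Return (low, high) revenue in dollars from unit and price ranges."""
    return units_low * price_low, units_high * price_high


# Figures quoted in the article: 60,000 to 70,000 cabinets
# at roughly $2 million to $3 million each.
low, high = revenue_range(60_000, 70_000, 2_000_000, 3_000_000)

print(f"${low / 1e9:.0f}B to ${high / 1e9:.0f}B")  # $120B to $210B
```

Under this simple multiplication, the $210 billion figure corresponds to the top of both ranges (70,000 cabinets at $3 million each), while the bottom of both ranges works out to $120 billion.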

But will customer spending justify this in the future? Sequoia Capital analyst David Cahn estimated that the annual AI revenue required to pay for these investments has climbed to $600 billion… annually.

HSBC estimates pricing for NVIDIA GB200 NVL36 server rack system is $1.8 million and NVL72 is $3 million. Also estimates GB200 ASP is $60,000 to $70,000 and B100 ASP is $30,000 to $35,000.

– tae kim (@firstadopter) May 13, 2024

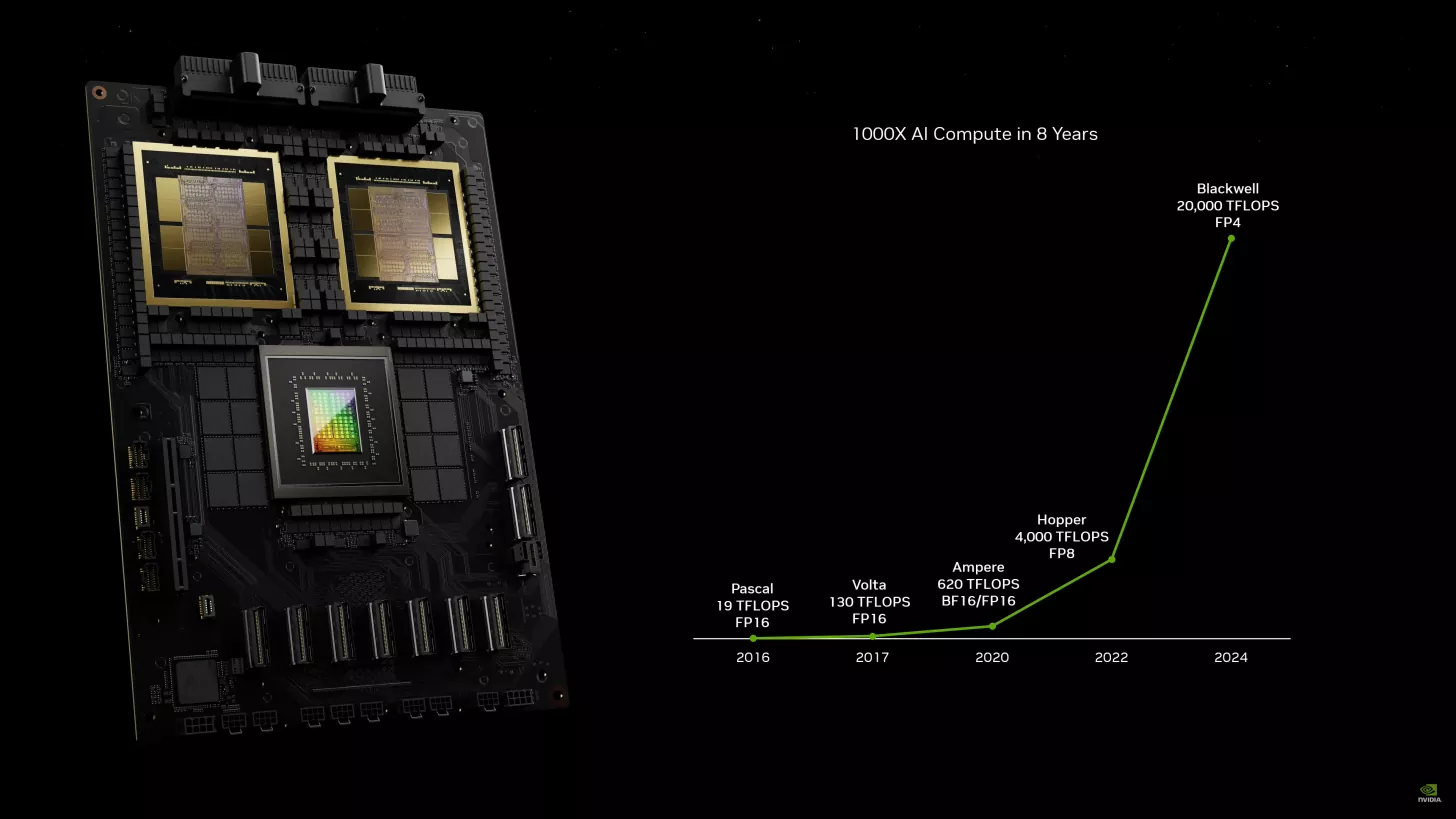

But for now there’s little doubt that companies will pay the price, no matter how painful. The B200, with 208 billion transistors, can deliver up to 20 petaflops of FP4 compute power. It would take 8,000 Hopper GPUs consuming 15 megawatts of power to train a 1.8 trillion-parameter model.

Such a job would require 2,000 Blackwell GPUs, consuming only 4 megawatts. The GB200 Superchip offers 30 times the performance of an H100 GPU for large language model inference workloads and significantly reduces power consumption.
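To put the training comparison in proportion, here is a rough sketch that works out the ratios from the quoted figures alone. It assumes the megawatt numbers describe total cluster power, so the per-GPU value includes whatever else is counted in that total. The dictionary layout and the helper `kw_per_gpu` are hypothetical names used only for this illustration.

```python
# Figures quoted in the article for training a 1.8 trillion-parameter model.
hopper = {"gpus": 8_000, "megawatts": 15}
blackwell = {"gpus": 2_000, "megawatts": 4}


def kw_per_gpu(cluster):
    """Total cluster power divided by GPU count, in kilowatts."""
    return cluster["megawatts"] * 1_000 / cluster["gpus"]


gpu_ratio = hopper["gpus"] / blackwell["gpus"]              # 4.0
power_ratio = hopper["megawatts"] / blackwell["megawatts"]  # 3.75

print(f"{gpu_ratio:.2f}x fewer GPUs, {power_ratio:.2f}x less power")
print(f"Hopper: {kw_per_gpu(hopper):.3f} kW per GPU")        # 1.875
print(f"Blackwell: {kw_per_gpu(blackwell):.3f} kW per GPU")  # 2.000
```

On these numbers, the GPU count falls by 4x and total power by 3.75x, so the implied power per GPU is roughly flat. The saving comes from needing far fewer GPUs for the same job.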

Due to high demand, Nvidia is increasing its orders with TSMC by roughly 25%, according to Morgan Stanley. It’s no stretch to say that Blackwell – designed to power a range of next-gen applications, including robotics, self-driving cars, engineering simulations, and healthcare products – will become the de facto standard for AI training and many inference workloads.