Welcome to the first part of my 12-part series, which will walk you through the steps to migrate VMware VMs to Hyper-V using SCVMM. I wrote this series to be suitable for a production or lab environment.

This series is also suitable for deploying a Hyper-V/VMM cluster.

In this first part, you'll plan your environment.

The tasks in this first part of the hands-on deployment guide are for planning. Apart from downloading and preparing the required installation files, all subsequent tasks guide you through planning your deployment. Deploying Hyper-V clustering and SCVMM is complex, so planning is essential to ensure a successful deployment.

Task 1: Download Installation Files

Before downloading the required installation files, create the folders to save the files using this PowerShell script.

Run this script on the server where you plan to save the installation files. You need about 10 GB of free space on the drive where you create these folders for storing the installation files, which you'll download shortly.

```powershell
Get-Disk #lists all available disks in the server
Get-Partition -DiskNumber 1 #displays the partitions on disk 1

#Create a folder in drive E:
New-Item -Name Hyper-VFiles -ItemType Directory -Path E:\

#Create subfolders in the Hyper-VFiles folder
#Change the Path parameter to the folder you created above
"WinServer22", "SQLServer22", "SCVMMServer22", "Drivers", "SCVMM UR2", "WAC", "Veeam" | ForEach-Object {
    New-Item -Name $_ -ItemType Directory -Path E:\Hyper-VFiles
}

#Create the share (replace lab\Administrator with your own domain\account)
$Parameters = @{
    Name       = "Hyper-VFiles"
    Path       = "E:\Hyper-VFiles"
    FullAccess = "lab\Administrator"
}
New-SmbShare @Parameters
```
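If you'd like to confirm the roughly 10 GB of free space and check that every subfolder exists before you start downloading, a small helper like the one below can do it. This is a minimal sketch and not part of the original script: the function names Test-InstallFreeSpace and Get-MissingInstallFolder are hypothetical, and the usage lines assume the E:\Hyper-VFiles layout created above.

```powershell
# Hypothetical helper: $true when a drive has at least RequiredGB of free space.
function Test-InstallFreeSpace {
    param(
        [Parameter(Mandatory)][long]$FreeBytes,   # e.g. (Get-PSDrive -Name E).Free
        [double]$RequiredGB = 10                  # roughly what the downloads need
    )
    return $FreeBytes -ge [long]($RequiredGB * 1GB)
}

# Hypothetical helper: returns the names of any subfolders that do not exist under Root.
function Get-MissingInstallFolder {
    param(
        [Parameter(Mandatory)][string]$Root,      # e.g. 'E:\Hyper-VFiles'
        [Parameter(Mandatory)][string[]]$Name
    )
    $Name | Where-Object { -not (Test-Path -LiteralPath (Join-Path $Root $_) -PathType Container) }
}

# Usage on the file server (assumes drive E: as in the script above):
# Test-InstallFreeSpace -FreeBytes (Get-PSDrive -Name E).Free
# Get-MissingInstallFolder -Root 'E:\Hyper-VFiles' -Name 'WinServer22','SQLServer22','SCVMMServer22','Drivers','SCVMM UR2','WAC','Veeam'
```

An empty result from Get-MissingInstallFolder means all seven folders are in place.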

Download the following files and save them in the folders created with the above PowerShell script:


- Windows Server 2022 ISO Image
  - This link downloads an evaluation copy of Windows.
  - If you're installing in production, install a retail copy.
  - I found that converting a Windows Server 2022 Standard Server Core evaluation installation to the full version didn't work as of August 2024, when I wrote this guide.
  - The conversion works if you install an evaluation copy with the Desktop Experience (full GUI).
- SQL Server 2022 Exe
  - This link downloads an evaluation copy of SQL Server 2022.
- SCVMM prerequisite tools
  - a) Windows ADK for Windows 10, version 1809 10.1.17763.1
  - b) Windows PE add-on for ADK, version 1809 10.1.17763.1
  - c) Microsoft ODBC Driver 17 for SQL Server
  - e) SQL Server Management Studio
  - f) Microsoft Visual C++ 2013 Redistributable (x86), 12.0.30501
  - g) Visual C++ 2013 Redistributable (x64)
- SCVMM Exe
  - This link downloads an evaluation copy of SCVMM 2022.
- Veeam Backup and Replication
  - a) Requires a business email to download
  - b) Download the Veeam installation files
  - c) Request a 30-day trial license
- SCVMM UR2
  - a) SCVMM Update Rollup 2
  - b) SCVMM Admin Console R2 Update
  - c) SCVMM Guest Agent R2 Update
  - d) Registry key script
  - When you download the files, rename them as shown in a), b), and c).
- Windows Admin Center
  - a) If you're deploying Hyper-V on Windows Server Core, you need WAC to manage the servers.
  - b) Windows Admin Center is free.

Task 2: Prepare Installation Files

The SCVMM and Microsoft SQL Server downloads from Task 1 are executables. In the two subsections below, I'll show you how to create the installation files from those downloaded executables.

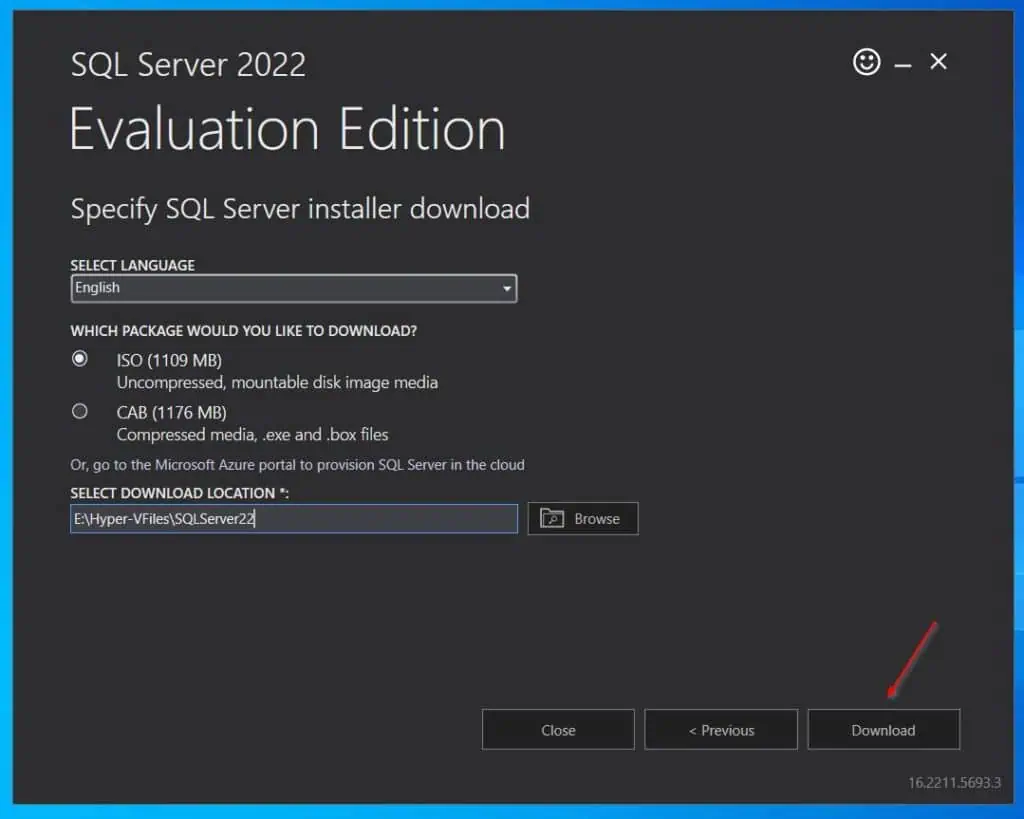

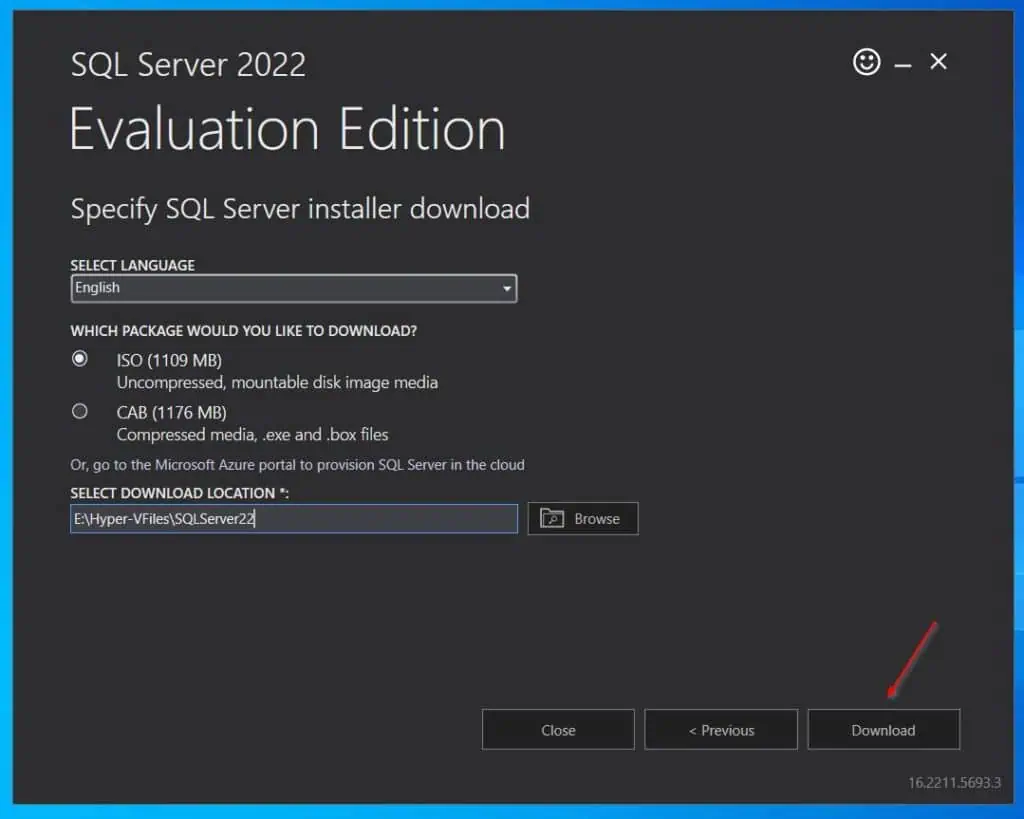

Task 2.1: Download the SQL Server ISO Image

Ideally, you should be able to install SQL Server with the downloaded executable file. However, when I tried doing that, I got the error message, "SQL server 2020 oops, a required file couldn't be downloaded."

The workaround is to download the ISO image of the SQL Server installation. Here are the steps:

- Double-click the executable file.
- Then, on the first page of the wizard, select Download Media.
- After that, the Specify SQL Server Installer Download screen loads. Select the language, select ISO as the package, and then choose the path to save the ISO image.

When you finish entering the details and selections, click the Download button.

You should see an ISO file when the download completes. See my screenshot below.

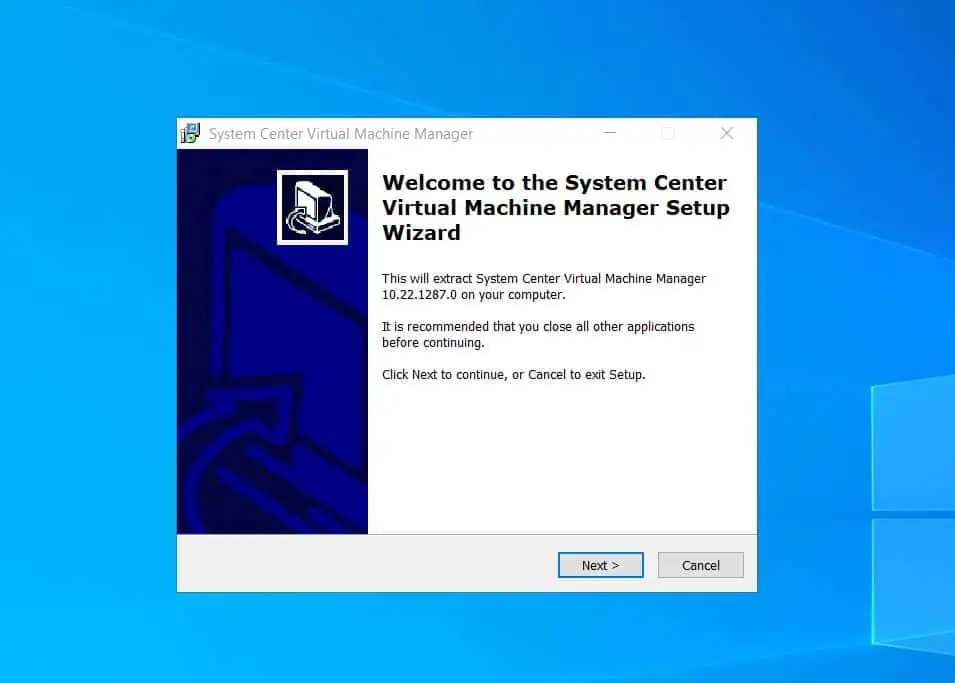
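Once the ISO is in the SQLServer22 folder, it can help to record its size and SHA256 hash, so you can later confirm that the copy you install from is the same file you downloaded. The sketch below uses the built-in Get-ChildItem and Get-FileHash cmdlets; the Get-IsoInventory function name and the example path are assumptions for illustration.

```powershell
# Hypothetical helper: lists .iso files in a folder with their size and SHA256 hash.
function Get-IsoInventory {
    param([Parameter(Mandatory)][string]$Path)   # e.g. 'E:\Hyper-VFiles\SQLServer22'

    Get-ChildItem -LiteralPath $Path -Filter '*.iso' -File | ForEach-Object {
        [pscustomobject]@{
            Name   = $_.Name
            SizeGB = [math]::Round($_.Length / 1GB, 2)
            SHA256 = (Get-FileHash -LiteralPath $_.FullName -Algorithm SHA256).Hash
        }
    }
}

# Usage (path assumes the folder layout from Task 1):
# Get-IsoInventory -Path 'E:\Hyper-VFiles\SQLServer22' | Format-Table -AutoSize
```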

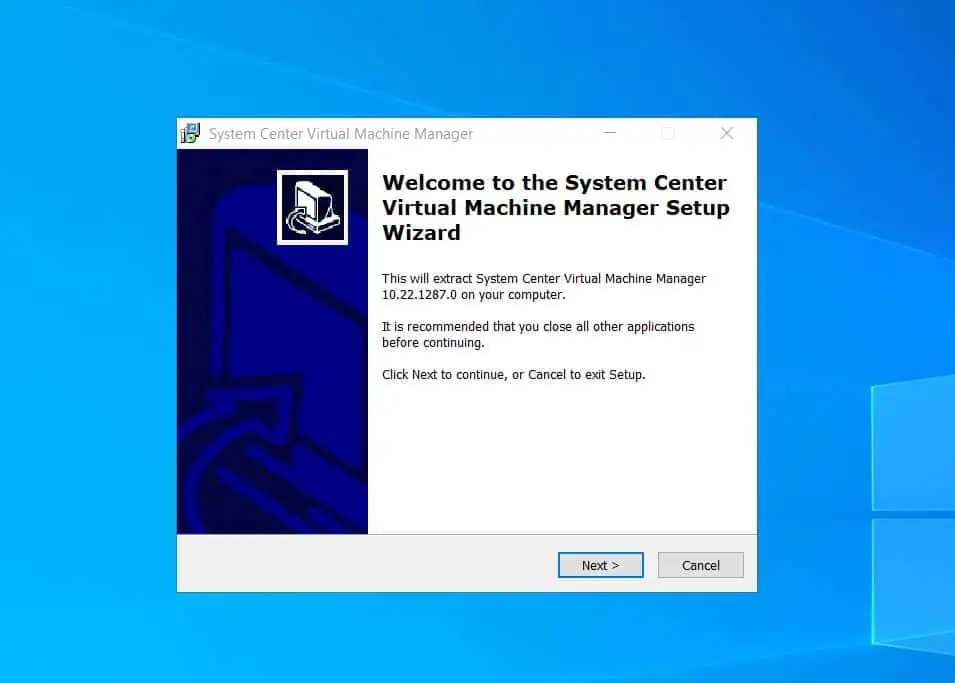

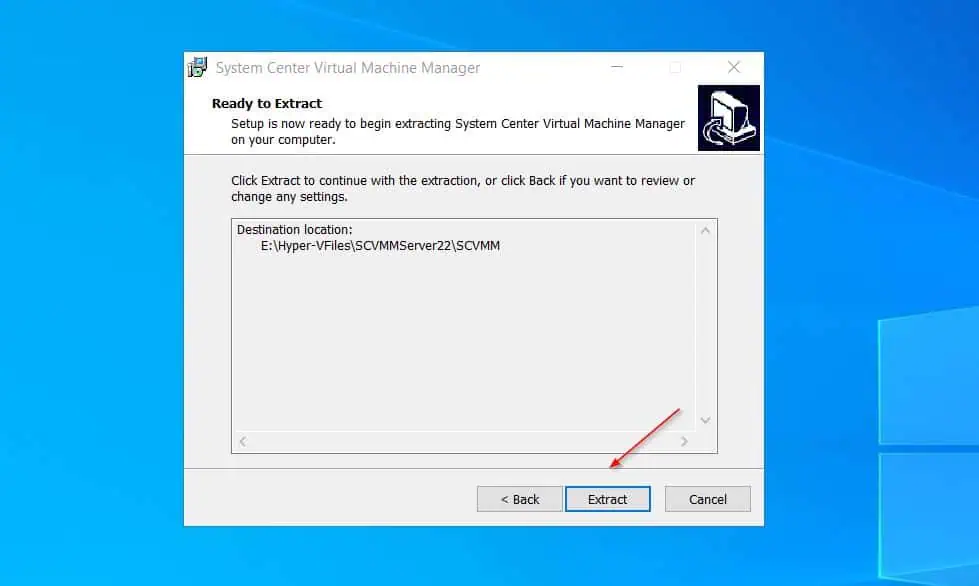

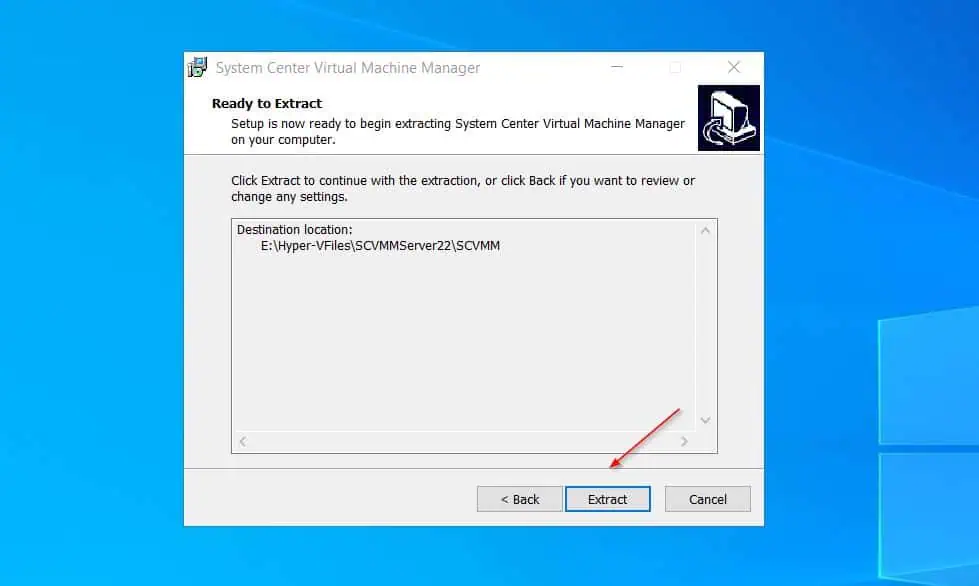

Task 2.2: Extract the SCVMM Installation Files

- Double-click the SCVMM executable file you downloaded earlier.
- After that, click Next on the first page, and then accept the license agreement. Then, enter the path to store the SCVMM installation files.

To make the installation files easy to locate and keep them separate from other files, add a folder name after the main SCVMMServer22 folder. I used SCVMM, for example, E:\Hyper-VFiles\SCVMMServer22\SCVMM.

- Finally, to extract the installation files, click the Extract button.

Task 3: Plan Servers and Network Interface Cards

For this project, you need at least two physical servers. The two servers will be used to create a Hyper-V cluster, which will eventually be managed by System Center Virtual Machine Manager (SCVMM).

In production, each server should have two network adapters connected to different VLANs (more on VLANs shortly). Moreover, each network adapter on a VLAN should be connected to a separate physical switch to provide redundancy.

Where possible, also plan for network card redundancy. By this, I mean that you shouldn't use two ports on the same physical adapter for teaming: if that adapter fails, the server loses its connection to that VLAN, and the service it provides becomes unavailable.

Having said all that, I'll use an HP ENVY x360 Convertible laptop and an HP EliteDesk 800 G2 SFF in my home test lab as my Hyper-V hosts. I also have a Dell Latitude E7470 running Windows Server 2022 Standard that provides iSCSI storage.

If you need to buy these (or similar devices) for a home lab setup, the table below lists their specifications and configuration.

Whether you're deploying in a production environment or building a lab, use these tables to plan your hardware and define the hosts' network settings.

| Make/Model | Total RAM | CPU | Storage |
|---|---|---|---|
| HP ENVY x360 Convertible | 32 GB | Intel Core i7-7500U 2.70 GHz | 1×250 GB SSD, 1×1 TB HDD |
| HP EliteDesk 800 G2 SFF | 32 GB | Intel Core i5-6500 3.2 GHz | 1×256 GB SSD |
| Dell Latitude E7470 | 16 GB | Intel Core i7-6600U 2.60 GHz | 1×2 TB SSD (internal), 2×1 TB external USB disks |

This table lists the host names and network settings of the three hosts.

| Computer Make/Model | Host Name | IP Address | DNS Server |
|---|---|---|---|
| HP ENVY x360 Convertible | IPMpHPV4 | 192.168.0.104 | 192.168.0.80 |
| HP EliteDesk 800 G2 SFF | IPMpHPV5 | 192.168.0.105 | 192.168.0.80 |
| Dell Latitude E7470 | IPMpiSCSI2 | 192.168.0.109 | 192.168.0.80 |

Task 4: Plan Server pNICs for Redundancy

If you're deploying in a production environment, you should have multiple physical switches with VLANs for each type of workload. For example, there should be a separate VLAN for Hyper-V host management, cluster traffic, and live migration.

Additionally, if you use iSCSI, there should be a separate VLAN for this traffic.

Earlier, I said your production environment should have multiple physical switches (at least two). Having more than one switch provides redundancy and avoids a single point of failure.

Speaking of redundancy design, the physical network interface cards (pNICs) on the servers (Hyper-V hosts) should also be designed for redundancy. Ideally, each server should be equipped with multiple pNICs, each offering multiple ports.

To improve throughput and provide further redundancy, the NICs for each Hyper-V cluster workload should be teamed in pairs. However, plan the NIC teaming so that a single network card does not become a single point of failure.

To avoid this, team the ports on the first pNIC with ports on the second pNIC.
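The rule above (never team two ports that sit on the same physical card) is easy to check on paper before you cable anything. The following sketch models each planned port as an object tagged with the card it sits on and reports any team whose ports all share one card. The card IDs (pNIC-A, pNIC-B) and the Find-SingleCardTeam function are hypothetical planning aids, not cmdlets from a Microsoft module.

```powershell
# Hypothetical plan: each port records the physical card (AdapterId) it sits on.
$ports = @(
    [pscustomobject]@{ Port = 'Mgt-pNIC-1'; AdapterId = 'pNIC-A'; Team = 'Mgt' }
    [pscustomobject]@{ Port = 'Mgt-pNIC-2'; AdapterId = 'pNIC-B'; Team = 'Mgt' }
    [pscustomobject]@{ Port = 'Clu-pNIC-1'; AdapterId = 'pNIC-A'; Team = 'Clu' }
    [pscustomobject]@{ Port = 'Clu-pNIC-2'; AdapterId = 'pNIC-A'; Team = 'Clu' }  # both ports on one card
)

# Returns the name of every team whose ports all live on a single physical card.
function Find-SingleCardTeam {
    param([Parameter(Mandatory)][object[]]$Port)

    $Port | Group-Object -Property Team | Where-Object {
        @($_.Group | Select-Object -ExpandProperty AdapterId -Unique).Count -lt 2
    } | Select-Object -ExpandProperty Name
}

Find-SingleCardTeam -Port $ports   # → Clu
```

Here the Clu team is flagged because a failure of pNIC-A would take down both of its ports; moving Clu-pNIC-2 to pNIC-B clears the warning.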

Task 5: Plan pNIC Teaming Configuration

Moving on to the configuration of the physical switch ports: Hyper-V teaming supports only trunk ports. Below is a sample configuration from Switch Configuration Examples for Microsoft SDN (Physical port configuration).

Here is the text from that page: "Each physical port must be configured to act as a switchport and have the mode set to trunk to allow multiple VLANs to be sent to the host. For RDMA Priority-flow-control must be on and the service-policy must point to the input queue that you will define below."

```
interface Ethernet1/3/1
  speed 10000
  priority-flow-control mode on
  switchport mode trunk
  switchport trunk native vlan VLAN_ID
  switchport trunk allowed vlan VLAN_ID_RANGE
  spanning-tree port type edge trunk
  service-policy type queuing input INPUT_QUEUING
  no shutdown
```

Note that Hyper-V teaming doesn't support LACP. If your network team configures the switch ports for LACP, the Hyper-V teamed switch won't work.

Speaking of teamed switches, Hyper-V in Windows Server 2022 supports a virtual switch called Switch Embedded Teaming (SET). This type of NIC teaming is configured and managed in Hyper-V.

Hyper-V SET operates in switch-independent mode. This means that Hyper-V handles the teaming, unlike LACP, where the physical switch handles the teaming and load balancing.

After everything I've said about planning server pNIC configuration, physical switch connections, and VLANs for redundancy, I'll use a simple configuration in my lab. My home lab doesn't have the redundant devices described in this and the previous subsections.

So, the PCs I'm using as my Hyper-V hosts each have one pNIC. This pNIC will carry all traffic on a single network, 192.168.0.0/24.

If you're building a 2-node Hyper-V cluster in production, your hosts should have the following pNIC setup:

| Host Name | Default pNIC Names* | New pNIC Names | Hyper-V SET Name** |
|---|---|---|---|
| IPMpHPV4 | Network 1, Network 2 | Mgt-pNIC-1, Mgt-pNIC-2 | Mgt-vSwitch |
| | Network 3, Network 4 | Clu-pNIC-1, Clu-pNIC-2 | Clu-vSwitch |
| | Network 5, Network 6 | Lmg-pNIC-1, Lmg-pNIC-2 | Lmg-vSwitch |
| | Network 7*** | Str-vSwitch | Str-vSwitch |
| IPMpHPV5 | Network 1, Network 2 | Mgt-pNIC-1, Mgt-pNIC-2 | Mgt-vSwitch |
| | Network 3, Network 4 | Clu-pNIC-1, Clu-pNIC-2 | Clu-vSwitch |
| | Network 5, Network 6 | Lmg-pNIC-1, Lmg-pNIC-2 | Lmg-vSwitch |
| | Network 7 | Str-vSwitch | Str-vSwitch |

*I'm using Network 1, Network 2, etc., as the default names of the physical network adapters. In reality, the names will be different.

**See Task 7 for the virtual switch planning details.

***This is for the iSCSI traffic. I'm assuming that this NIC will not be teamed.

To plan your deployment, use the actual names of the pNICs in your Hyper-V servers. You don't need to rename the NICs at this stage, as that will be done later in the guide.
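To make sure your plan uses the names your servers actually report, you could compare the planned default names against the output of Get-NetAdapter -Physical on each host. A minimal sketch, assuming PowerShell on each Hyper-V host; Get-UnmatchedPnicName is a hypothetical helper, and 'Network 1' and 'Network 2' are only the placeholder names from the table above.

```powershell
# Hypothetical helper: returns planned adapter names that the host does not report.
function Get-UnmatchedPnicName {
    param(
        [Parameter(Mandatory)][string[]]$PlannedName,
        [Parameter(Mandatory)][AllowEmptyCollection()][string[]]$ActualName
    )
    # -notin compares case-insensitively, like Windows adapter names.
    $PlannedName | Where-Object { $_ -notin $ActualName }
}

# Usage on each Hyper-V host (Get-NetAdapter ships with Windows Server):
# $actual = (Get-NetAdapter -Physical).Name
# Get-UnmatchedPnicName -PlannedName 'Network 1', 'Network 2' -ActualName $actual
```

Any name it returns is a placeholder in your plan that still needs to be replaced with a real adapter name.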

For my Hyper-V lab, I use the simplified configuration below:

| Host Name | Default pNIC Names* | New pNIC Names | Hyper-V SET Name** |
|---|---|---|---|
| IPMpHPV4 | Network | Mgt-pNIC | Mgt-vSwitch |
| IPMpHPV5 | Network | Mgt-pNIC | Mgt-vSwitch |

Task 6: Plan Host Networking Configuration

Use the table below to plan the network configuration of the Hyper-V hosts.

| Host Name | IP Address | Subnet Mask | Default Gateway* | Preferred DNS |
|---|---|---|---|---|
| IPMpHPV4 | 192.168.0.101 | 255.255.255.0 | 192.168.0.1 | 192.168.0.80 |
| | 192.168.1.11 | 255.255.255.0 | Not Applicable | Not Applicable |
| | 192.168.2.11 | 255.255.255.0 | Not Applicable | Not Applicable |
| | 192.168.3.12 | 255.255.255.0 | Not Applicable | Not Applicable |
| IPMpHPV5 | 192.168.0.102 | 255.255.255.0 | 192.168.0.1 | 192.168.0.80 |
| | 192.168.1.12 | 255.255.255.0 | Not Applicable | Not Applicable |
| | 192.168.2.12 | 255.255.255.0 | Not Applicable | Not Applicable |
| | 192.168.3.13 | 255.255.255.0 | Not Applicable | Not Applicable |
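New-NetIPAddress takes a prefix length rather than a dotted subnet mask, so it can help to convert the masks in this table before you configure the hosts later in the guide. The sketch below does that conversion and builds a parameter set for IPMpHPV4's management address. ConvertTo-PrefixLength is a hypothetical function, and the interface alias 'vEthernet (Mgt-vSwitch)' is an assumption based on the name Hyper-V usually gives the host vNIC of a switch called Mgt-vSwitch.

```powershell
# Hypothetical helper: converts a dotted subnet mask (e.g. 255.255.255.0) to a prefix length.
function ConvertTo-PrefixLength {
    param([Parameter(Mandatory)][string]$SubnetMask)

    # Turn each of the four bytes into 8 binary digits and join them into one 32-bit string.
    $bits = ([System.Net.IPAddress]::Parse($SubnetMask).GetAddressBytes() |
        ForEach-Object { [Convert]::ToString($_, 2).PadLeft(8, '0') }) -join ''

    # A valid mask is a run of 1s followed by a run of 0s.
    if ($bits -notmatch '^1*0*$') { throw "Not a contiguous subnet mask: $SubnetMask" }
    ($bits -replace '0', '').Length
}

# Parameter set for IPMpHPV4's management address, using the values from the table above.
$hostIp = @{
    InterfaceAlias = 'vEthernet (Mgt-vSwitch)'   # assumed host vNIC name; created later in the guide
    IPAddress      = '192.168.0.101'
    PrefixLength   = ConvertTo-PrefixLength -SubnetMask '255.255.255.0'   # 24
    DefaultGateway = '192.168.0.1'
}

# Later, on the host itself:
# New-NetIPAddress @hostIp
# Set-DnsClientServerAddress -InterfaceAlias $hostIp.InterfaceAlias -ServerAddresses '192.168.0.80'
```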

Use the table below to plan the networking configuration for the cluster resource name, the VMM VM, and the cluster file server.

| Resource Name | Resource Purpose | IP Address | Subnet Mask | Default Gateway* | Preferred DNS |
|---|---|---|---|---|---|
| IPMvVMM | VMM VM | 192.168.0.106 | 255.255.255.0 | 192.168.0.1 | 192.168.0.80 |
| lab-cluster-2 | Cluster resource name | 192.168.0.107 | 255.255.255.0 | 192.168.0.1 | 192.168.0.80 |
| lab-vmm-lib | Cluster file server name | 192.168.0.108 | 255.255.255.0 | 192.168.0.1 | 192.168.0.80 |
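Before moving on, it's worth checking that every management address you've planned sits on the 192.168.0.0/24 network and that no two resources share an IP. The sketch below does both checks with plain .NET IPAddress parsing. Test-SameSubnet is a hypothetical helper, and the address list is copied from the host table in Task 6 (management addresses only), the iSCSI host entry in Task 3, and the table above.

```powershell
# Hypothetical helper: $true when two IPv4 addresses fall in the same subnet for the given mask.
function Test-SameSubnet {
    param(
        [Parameter(Mandatory)][string]$IPAddress,
        [Parameter(Mandatory)][string]$ReferenceAddress,
        [Parameter(Mandatory)][string]$SubnetMask
    )
    $a = [System.Net.IPAddress]::Parse($IPAddress).GetAddressBytes()
    $b = [System.Net.IPAddress]::Parse($ReferenceAddress).GetAddressBytes()
    $m = [System.Net.IPAddress]::Parse($SubnetMask).GetAddressBytes()
    for ($i = 0; $i -lt $m.Length; $i++) {
        # Compare only the network portion of each byte.
        if (($a[$i] -band $m[$i]) -ne ($b[$i] -band $m[$i])) { return $false }
    }
    return $true
}

# Management-network addresses from the planning tables.
$plannedIPs = [ordered]@{
    'IPMpHPV4'      = '192.168.0.101'
    'IPMpHPV5'      = '192.168.0.102'
    'IPMpiSCSI2'    = '192.168.0.109'
    'IPMvVMM'       = '192.168.0.106'
    'lab-cluster-2' = '192.168.0.107'
    'lab-vmm-lib'   = '192.168.0.108'
}

# Anything not on the same /24 as the default gateway 192.168.0.1
$offSubnet = @($plannedIPs.GetEnumerator() | Where-Object {
    -not (Test-SameSubnet -IPAddress $_.Value -ReferenceAddress '192.168.0.1' -SubnetMask '255.255.255.0')
})

# Any address assigned to more than one resource
$duplicates = @($plannedIPs.Values | Group-Object | Where-Object Count -gt 1)

"Off-subnet: $($offSubnet.Count); duplicate addresses: $($duplicates.Count)"
```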

Task 7: Plan Hyper-V Virtual Switches and Storage

Having completed the server pNIC, physical switch configuration, and VLAN planning, it's time to plan the Hyper-V virtual switches.

As I already hinted, if you're setting this up in a production environment, it's recommended to have teamed pNICs designated for different workloads. These teamed networks will connect to Hyper-V Switch Embedded Teaming (SET) virtual switches.

The tables below show a sample configuration for a typical production environment and the configuration for a home lab setup.

Typical production environment:

| Virtual Switch Name | Type | Purpose | Connected to pNIC |
|---|---|---|---|
| Mgt-vSwitch | External | Management/VM traffic | Teamed pNICs connected to the management traffic VLAN |
| Clu-vSwitch | External | Cluster traffic | Teamed pNICs connected to the cluster traffic VLAN |
| Lmg-vSwitch | External | Live Migration traffic | Teamed pNICs connected to the Live Migration VLAN |
| Str-vSwitch | External | iSCSI traffic | Teamed pNICs connected to the iSCSI traffic VLAN |

My home lab:

| Virtual Switch Name | Type | Purpose | Connected to pNIC |
|---|---|---|---|
| Mgt-vSwitch | External | All traffic | A single pNIC connected to my home hub |

If you don't have separate VLANs for cluster and live migration traffic, you can plan a single vSwitch for the two workloads.
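On a Hyper-V host, a SET switch is created with New-VMSwitch and its -EnableEmbeddedTeaming parameter. As a sketch of how the production plan could translate into commands later in the guide, the snippet below builds one New-VMSwitch parameter set per planned SET switch. The New-SetSwitchParameter function is hypothetical, it assumes the pNICs have already been renamed as planned in Task 5, and the actual New-VMSwitch call is left commented out so you can review the plan first.

```powershell
# Planned SET switches and their member pNICs (names from the production pNIC table).
$vSwitchPlan = [ordered]@{
    'Mgt-vSwitch' = @('Mgt-pNIC-1', 'Mgt-pNIC-2')
    'Clu-vSwitch' = @('Clu-pNIC-1', 'Clu-pNIC-2')
    'Lmg-vSwitch' = @('Lmg-pNIC-1', 'Lmg-pNIC-2')
}

# Hypothetical helper: builds a parameter hashtable for New-VMSwitch.
function New-SetSwitchParameter {
    param(
        [Parameter(Mandatory)][string]$Name,
        [Parameter(Mandatory)][string[]]$NetAdapterName
    )
    @{
        Name                  = $Name
        NetAdapterName        = $NetAdapterName
        EnableEmbeddedTeaming = $true   # makes this a Switch Embedded Teaming (SET) switch
        AllowManagementOS     = $true   # assumption: a host vNIC carries the host IPs planned in Task 6
    }
}

$switchSplats = foreach ($entry in $vSwitchPlan.GetEnumerator()) {
    New-SetSwitchParameter -Name $entry.Key -NetAdapterName $entry.Value
}

# Later, on each Hyper-V host:
# foreach ($p in $switchSplats) { New-VMSwitch @p }
```

If cluster and live migration share one VLAN, you would simply drop one of the entries from $vSwitchPlan.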

The storage table below, used in my lab, is for guidance purposes. You should use larger storage capacities for the VMM Shared Library and the Cluster Shared Volume in production.

However, for the cluster quorum witness disk, 1 GB is enough.

| iSCSI Virtual Disk Name | Size | Purpose |
|---|---|---|
| QuorumvDisk | 1 GB | Cluster Quorum witness disk |
| VMMLibvDisk | 200 GB | VMM Shared Library |
| CSVvDisk | 720 GB | Cluster Shared Volume |
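Adding up the three virtual disks gives the minimum capacity the iSCSI target needs for this lab (1 + 200 + 720 = 921 GB). The sketch below does that sum and prepares parameter sets for New-IscsiVirtualDisk, the iSCSI Target Server cmdlet that creates the disks. The E:\iSCSIVirtualDisks folder and the .vhdx file names are assumptions; use whatever location you plan on the iSCSI server.

```powershell
# The three iSCSI virtual disks from the table above.
$iscsiDisks = @(
    [pscustomobject]@{ Name = 'QuorumvDisk'; SizeGB = 1;   Purpose = 'Cluster Quorum witness disk' }
    [pscustomobject]@{ Name = 'VMMLibvDisk'; SizeGB = 200; Purpose = 'VMM Shared Library' }
    [pscustomobject]@{ Name = 'CSVvDisk';    SizeGB = 720; Purpose = 'Cluster Shared Volume' }
)

# Minimum capacity the iSCSI target needs for these disks.
$totalGB = ($iscsiDisks | Measure-Object -Property SizeGB -Sum).Sum   # 921

# Hypothetical folder on the iSCSI target server; adjust to your own plan.
$diskFolder = 'E:\iSCSIVirtualDisks'

# One New-IscsiVirtualDisk parameter set per disk.
$iscsiSplats = foreach ($d in $iscsiDisks) {
    @{
        Path      = "$diskFolder\$($d.Name).vhdx"
        SizeBytes = $d.SizeGB * 1GB
    }
}

# Later, on the iSCSI target server:
# foreach ($s in $iscsiSplats) { New-IscsiVirtualDisk @s }
```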

Deploying a Hyper-V/SCVMM cluster is a complex process involving multiple tasks. Moreover, some tasks must be completed before the next ones can start. I've arranged the tasks in this guide in the order that ensures a smooth deployment. If you skip a step, including the planning steps in this part, you will almost certainly run into problems later.

Congratulations! You've completed the planning part of this hands-on guide. Proceed to Part 2: Prep Hyper-V Hosts.