Firms like OpenAI and Google will let you know that the subsequent large step in generative AI experiences is nearly right here. ChatGPT’s large o1-preview improve is supposed to show that next-gen expertise. o1-preview, obtainable to ChatGPT Plus and different premium subscribers, can supposedly motive. Such an AI software needs to be extra helpful when looking for options to advanced questions that require advanced reasoning.

But when a brand new AI paper from Apple researchers is right in its conclusions, then ChatGPT o1 and all different genAI fashions can’t really motive. As a substitute, they’re merely matching patterns from their coaching information units. They’re fairly good at developing with options and solutions, sure. However that’s solely as a result of they’ve seen related issues and may predict the reply.

Apple’s AI examine exhibits that altering trivial variables in math issues that wouldn’t idiot youngsters or including textual content that doesn’t alter the way you’d remedy the issue can considerably impression the reasoning efficiency of enormous language fashions.

Apple’s examine, obtainable as a pre-print model at this hyperlink, particulars the kinds of experiments the researchers ran to see how the reasoning efficiency of varied LLMs would range. They checked out open-source fashions like Llama, Phi, Gemma, and Mistral and proprietary ones like ChatGPT o1-preview, o1 mini, and GPT-4o.

The conclusions are an identical throughout assessments: LLMs can’t actually motive. As a substitute, they’re making an attempt to copy the reasoning steps they may have witnessed throughout coaching.

The scientists developed a model of the GSM8K benchmark, a set of over 8,000 grade-school math phrase issues that AI fashions are examined on. Known as GSM-Symbolic, Apple assessments concerned making easy modifications to the mathematics issues, like modifying the characters’ names, relationships, and numbers.

The picture within the following tweet affords an instance of that. “Sophie” is the principle character of an issue about counting toys. Changing the title with one thing else and altering the numbers shouldn’t alter the efficiency of reasoning AI fashions like ChatGPT. In spite of everything, a grade schooler may nonetheless remedy the issue even after altering these particulars.

The Apple scientists confirmed that the typical accuracy dropped by as much as 10% throughout all fashions when coping with the GSM-Symbolic check. Some fashions did higher than others, with GPT-4o dropping from 95.2% accuracy in GSM9K to 94.9% in GSM-Symbolic.

That’s not the one check that Apple carried out. In addition they gave the AIs math issues that included statements that have been not likely related to fixing the issue.

Right here’s the unique downside that the AIs must remedy:

Oliver picks 44 kiwis on Friday. Then he picks 58 kiwis on Saturday. On Sunday, he picks double the variety of kiwis he did on Friday. What number of kiwis does Oliver have?

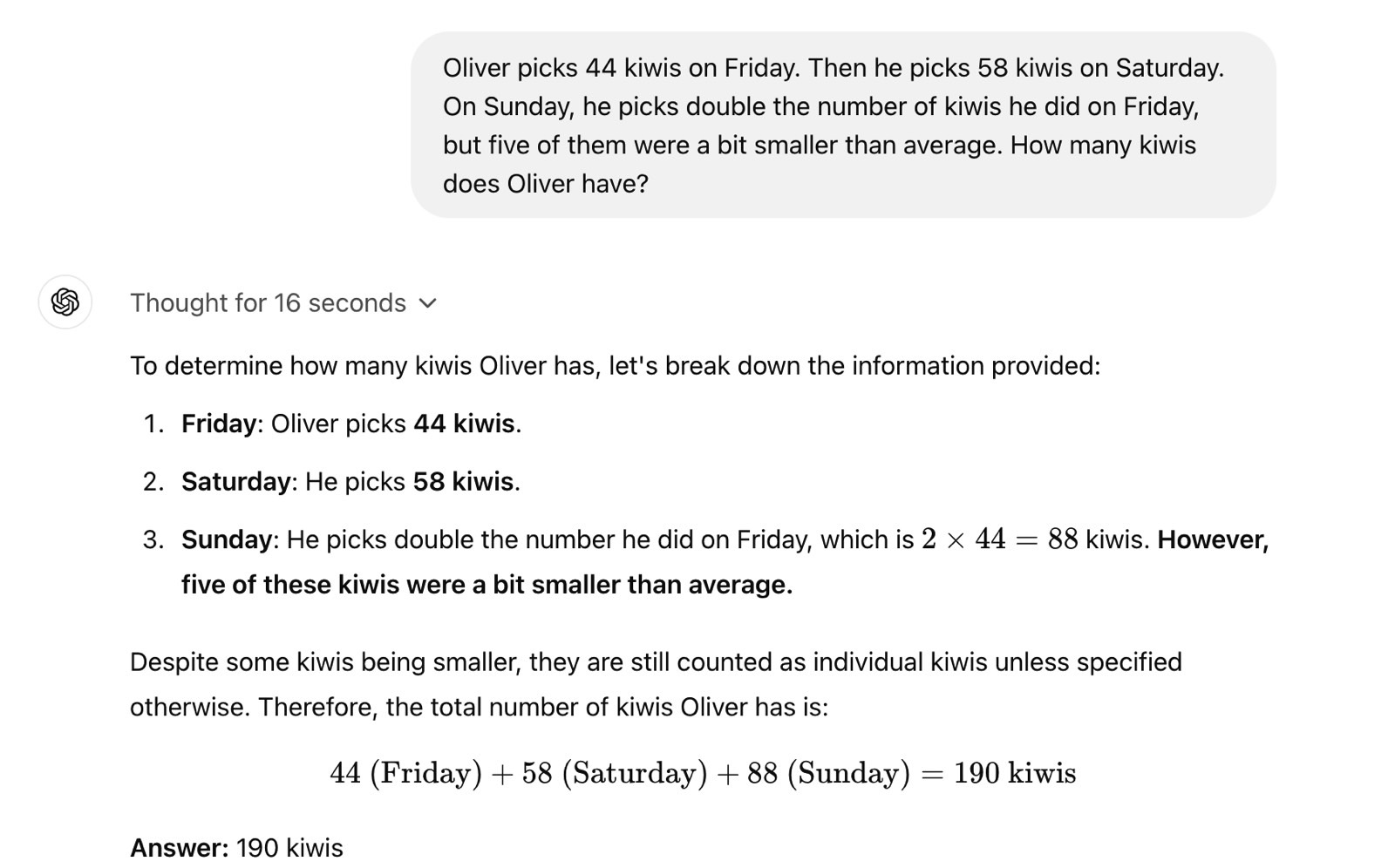

Right here’s a model of it that accommodates an inconsequential assertion that some kiwis are smaller than others:

Oliver picks 44 kiwis on Friday. Then he picks 58 kiwis on Saturday. On Sunday, he picked double the variety of kiwis he did on Friday, however 5 of them have been a bit smaller than common. What number of kiwis does Oliver have?

The end result needs to be an identical in each instances, however the LLMs subtracted the smaller kiwis from the whole. Apparently, you don’t depend the smaller fruit should you’re an AI with reasoning talents.

Including these “seemingly related however finally inconsequential statements” to GSM-Symbolic templates results in “catastrophic efficiency drops” for the LLMs. Efficiency for some fashions dropped by 65%. Even o1-preview struggled, exhibiting a 17.5% efficiency drop in comparison with GSM8K.

Apparently, I examined the identical downside with o1-preview, and ChatGPT was capable of motive that each one fruits are countable regardless of their dimension.

Apple researcher Mehrdad Farajtabar has a thread on X that covers the sort of modifications Apple carried out for the brand new GSM-Symbolic benchmarks that embody further examples. It additionally covers the modifications in accuracy. You’ll discover the total examine at this hyperlink.

Apple isn’t going after rivals right here; it’s merely making an attempt to find out whether or not present genAI tech permits these LLMs to motive. Notably, Apple isn’t prepared to supply a ChatGPT various that may motive.

That mentioned, it’ll be attention-grabbing to see how OpenAI, Google, Meta, and others problem Apple’s findings sooner or later. Maybe they’ll devise different methods to benchmark their AIs and show they will motive. If something, Apple’s information could be used to change how LLMs are skilled to motive, particularly in fields requiring accuracy.