

For Tyler Kay and Jordan Parlour, justice for what they posted on social media has come swift and heavy.

Kay, 26, and Parlour, 28, have been sentenced to 38 months and 20 months in jail respectively for stirring up racial hatred online during the summer riots.

Charges in the aftermath of the disorder felt like a significant moment, in which people had to face real-life consequences for what they said and did online.

There was widespread recognition that false claims and online hate contributed to the violence and racism on British streets in August. In their wake, Prime Minister Keir Starmer said social media “carries responsibility” for tackling misinformation.

More than 30 people found themselves arrested over social media posts. From what I’ve found, at least 17 of those have been charged.

The police will have deemed that some of those investigated didn’t meet the threshold for criminality. And in plenty of cases, the legal system could be the wrong way to deal with social media posts.

But some posts that didn’t cross the line into criminality may still have had real-life consequences. So for those who made them, no day of reckoning.

And nor, it seems, for the social media giants whose algorithms, time and time again, are accused of prioritising engagement over safety, pushing content regardless of the reaction it can provoke.


At the time of the riots, I had wondered whether this could be the moment that finally changed the online landscape.

Now, though, I’m not so sure.

To make sense of the role of the social media giants in all this, it’s useful to start by looking at the cases of a dad in Pakistan and a businesswoman from Chester.

On X (formerly known as Twitter) a pseudo-news website called Channel3Now posted a false name of the 17-year-old charged over the murders of three girls in Southport. This false name was then widely quoted by others.

Another poster who shared the false name on X was Bernadette Spofforth, a 55-year-old from Chester with more than 50,000 followers. She had previously shared posts raising questions about lockdown and net-zero climate change measures.

The posts from Channel3Now and Ms Spofforth also wrongly suggested the 17-year-old was an asylum seeker who had arrived in the UK by boat.

All this, combined with further untrue claims from other sources that the attacker was a Muslim, was widely blamed for contributing to the riots, some of which targeted mosques and asylum seekers.

I found that Channel3Now was connected to a man named Farhan Asif in Pakistan, as well as a hockey player in Nova Scotia and someone who claimed to be called Kevin. The site appeared to be a commercial operation looking to increase views and sell adverts.

At the time, a person claiming to be from Channel3Now’s management told me that the publication of the false name “was an error, not intentional” and denied being the origin of that name.

And Ms Spofforth told me she deleted her untrue post about the suspect as soon as she realised it was false. She also strongly denied she had made the name up.

So, what happened next?

Farhan Asif and Bernadette Spofforth were both arrested over these posts not long after I spoke to them.

Charges, however, were dropped. Authorities in Pakistan said they could not find evidence that Mr Asif was the originator of the fake name. Cheshire police also decided not to charge Ms Spofforth due to “insufficient evidence”.

Mr Asif appears to have gone to ground. The Channel3Now website and several connected social media pages have been removed.

Bernadette Spofforth, however, is now back posting regularly on X. This week alone she’s had a couple of million views across her posts.

She says she has become an advocate for freedom of expression since her arrest. She says: “As has now been shown, the idea that one single tweet could be the catalyst for the riots which followed the atrocities in Southport is simply not true.”

Focusing on these individual cases can offer a valuable insight into who shares this kind of content and why.

But to get to the heart of the problem, it’s necessary to take a further step back.

While people are responsible for their own posts, I’ve found time and time again that this is fundamentally about how different social media sites work.

Decisions made under the tenure of Elon Musk, the owner of X, are also part of the story. These decisions include the ability to purchase blue ticks, which afford your posts greater prominence, and a new approach to moderation that favours freedom of expression above all else.

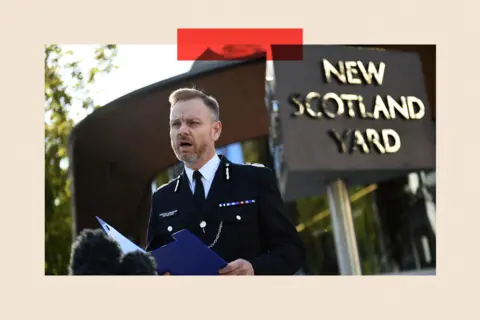

The UK’s head of counter-terror policing, Assistant Commissioner Matt Jukes, told me for the BBC’s Newscast that “X was an enormous driver” of posts that contributed to the summer’s disorder.


A team he oversees called the Internet Referral Unit noticed “the disproportionate effect of certain platforms”, he said.

He says there were about 1,200 referrals (posts flagged to police by members of the public) in relation to the riots alone. For him that was “just the tip of the iceberg”. The unit saw 13 times more referrals in relation to X than TikTok.

Acting on content that is illegal and in breach of terror laws is, in one sense, the easy bit. Harder to tackle are those posts that fall into what Mr Jukes calls the “lawful but awful” category.

The unit flags such material to the sites it was posted on when it thinks it breaches their terms and conditions.

But Mr Jukes found Telegram, host of several large groups in which disorder was organised and hate and disinformation were shared, hard to deal with.

In Mr Jukes’s view, Telegram has a “cast-iron dedication to not interact” with the authorities.

Elon Musk has accused law enforcement in the UK of trying to police opinions about issues such as immigration, and there have been accusations that action taken against individual posters has been disproportionate.

Mr Jukes responds: “I would say this to Elon Musk if he was here, we weren’t arresting people for having opinions on immigration. [Police] went and arrested people for threatening to, or inciting others to, burn down mosques or hotels.”

But while accountability has been felt at “the very sharp end” by those who participated in the disorder and posted hateful content online, Mr Jukes said “the people who make billions from providing those opportunities” to post harmful content on social media “have not really paid any price at all”.

He wants the Online Safety Act that comes into effect at the start of 2025 bolstered so it can better deal with content that is “lawful but awful”.

Telegram told the BBC “there is no place for calls to violence” on its platform and said “moderators removed UK channels calling for disorder when they were discovered” during the riots.

“While Telegram’s moderators remove millions of pieces of harmful content each day, user numbers to almost a billion causes certain growing pains in content moderation, which we’re currently addressing,” a spokesperson said.

I also contacted X, which did not respond to the points the BBC raised.

X continues to share in its publicly available guidelines that its priority is protecting and defending the user’s voice.

Almost every investigation I do now comes back to the design of the social media sites and how algorithms push content that triggers a reaction, usually regardless of the impact it can have.

During the disorder, algorithms amplified disinformation and hate to millions, drawing in new recruits and incentivising people to share controversial content for views and likes.

Why doesn’t that change? Well, from what I’ve found, the companies would have to be compelled to alter their business models. And for politicians and regulators, that could prove to be a very big challenge indeed.

